Listening City looks at how radical sensitivity can be embodied by AI-driven city infrastructure, and how this extreme "smartness" can change the way we behave, down to our most passing comments.

Individual Project at ArtCenter College of Design

Project shown at Ars Electronica

Specification

Design Research, Speculative Design, Artificial Intelligence

Execution

Unity, IBM Watson API, Motion Graphics, Arduino, Interactive Prototype

As artificial intelligence advances, more and more products and services will incorporate AI agents in the hope of improving the quality and efficiency of their consumer-facing business. However, resolving the ambiguity of spoken language remains a challenge in AI development, and the bias embedded in training data is another issue that deserves attention.

When the AI's apprehension and human intention are misaligned.

If HAL-9000 was Alexa (YouTube)

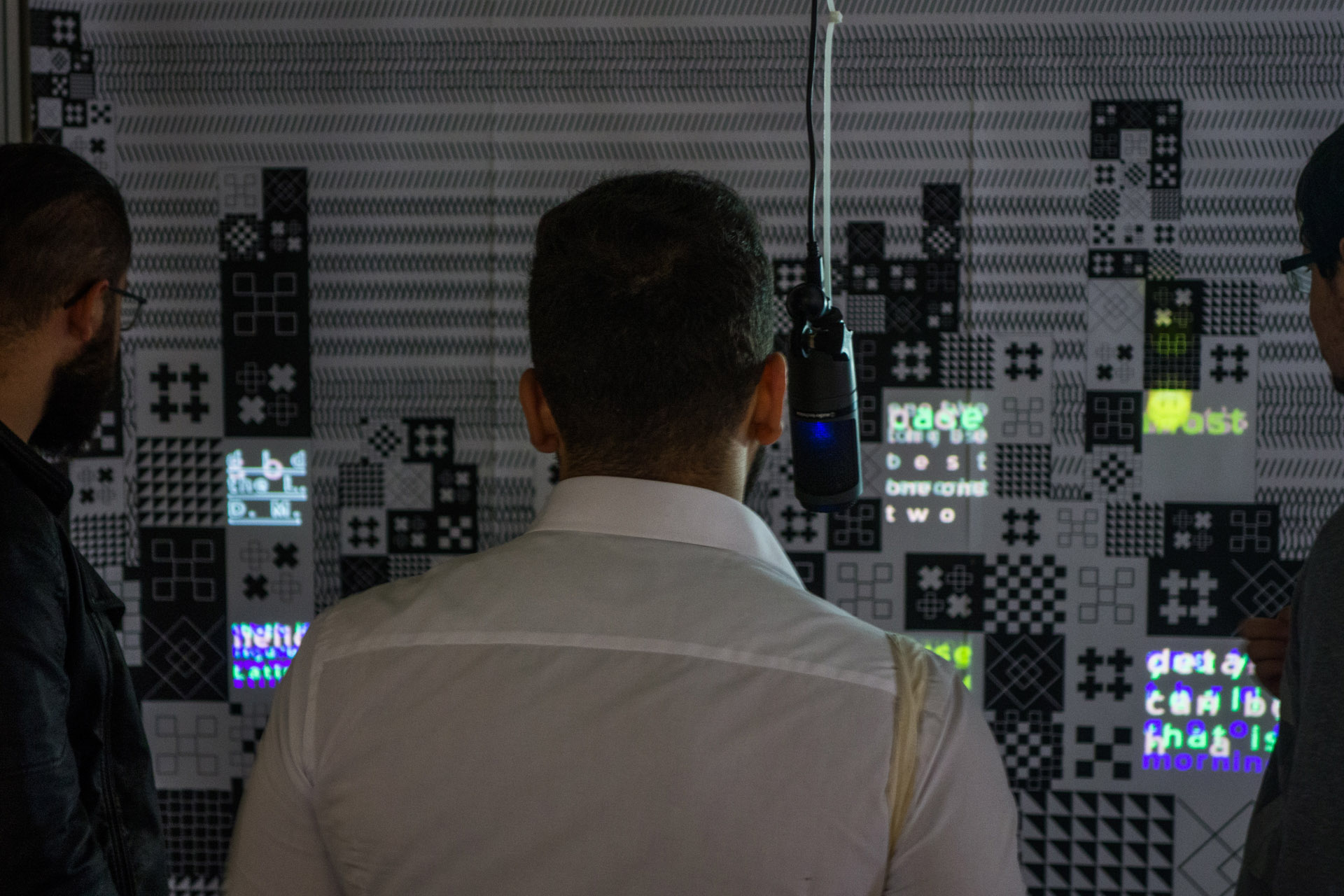

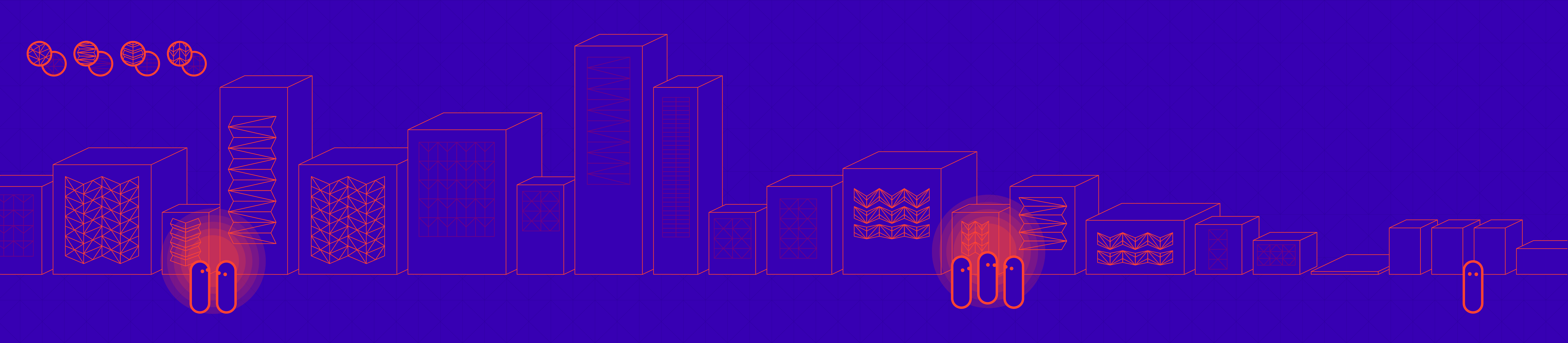

Listening City is an interactive installation that uses IBM Watson's Speech to Text and Tone Analyzer to visualize what the viewer says and what the machine apprehends. The installation sorts the detected speech into roughly ten emotions and intents; each category triggers a corresponding set of emojis that bursts out from a different part of a city silhouette, illustrating a world where ubiquitous AI agents listen and respond to residents.
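A minimal Python sketch of the category-to-emoji mapping described above. It assumes the Watson analysis has already been reduced to `(label, score)` pairs and does not call any IBM API. The ten category labels, the emoji sets, the city regions, the `0.5` threshold, and the names `CATEGORY_TABLE`, `Burst` and `bursts_for` are all hypothetical illustrations, not the installation's actual Unity implementation.

```python
from dataclasses import dataclass

# Hypothetical category table: roughly ten emotions/intents, each tied to an
# emoji set and a region of the city silhouette. Labels are illustrative only.
CATEGORY_TABLE = {
    "joy":        (["😀", "🎉"], "plaza"),
    "sadness":    (["😢", "💧"], "residential"),
    "anger":      (["😠", "🔥"], "downtown"),
    "fear":       (["😨", "👀"], "alley"),
    "tentative":  (["🤔", "❓"], "library"),
    "confident":  (["😎", "💪"], "tower"),
    "analytical": (["🧐", "📊"], "office"),
    "greeting":   (["👋", "🙂"], "station"),
    "request":    (["🙏", "📡"], "antenna"),
    "complaint":  (["😤", "📢"], "market"),
}


@dataclass
class Burst:
    category: str
    emojis: list
    region: str
    intensity: float


def bursts_for(scores, threshold=0.5):
    """Turn (label, score) pairs into emoji bursts, strongest first.

    Labels missing from CATEGORY_TABLE, or scoring below the threshold,
    produce no burst (the machine "didn't hear" them).
    """
    bursts = [
        Burst(label, CATEGORY_TABLE[label][0], CATEGORY_TABLE[label][1], score)
        for label, score in scores
        if label in CATEGORY_TABLE and score >= threshold
    ]
    return sorted(bursts, key=lambda b: b.intensity, reverse=True)


if __name__ == "__main__":
    # Hypothetical analyzer output for a mixed, hesitant utterance.
    scores = [("joy", 0.82), ("tentative", 0.61), ("fear", 0.31)]
    for b in bursts_for(scores):
        print(b.region, "".join(b.emojis), round(b.intensity, 2))
```

The threshold is where the misalignment the project plays with shows up: a viewer whose fear scores 0.31 gets no fear burst at all, so they may rephrase more emphatically to be "heard."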

This project plays with primitive semantic recognition and explores how viewers adapt their wording and phrasing to get a specific outcome from the AI.

How will people change their spoken behavior to make AI understand their intent?

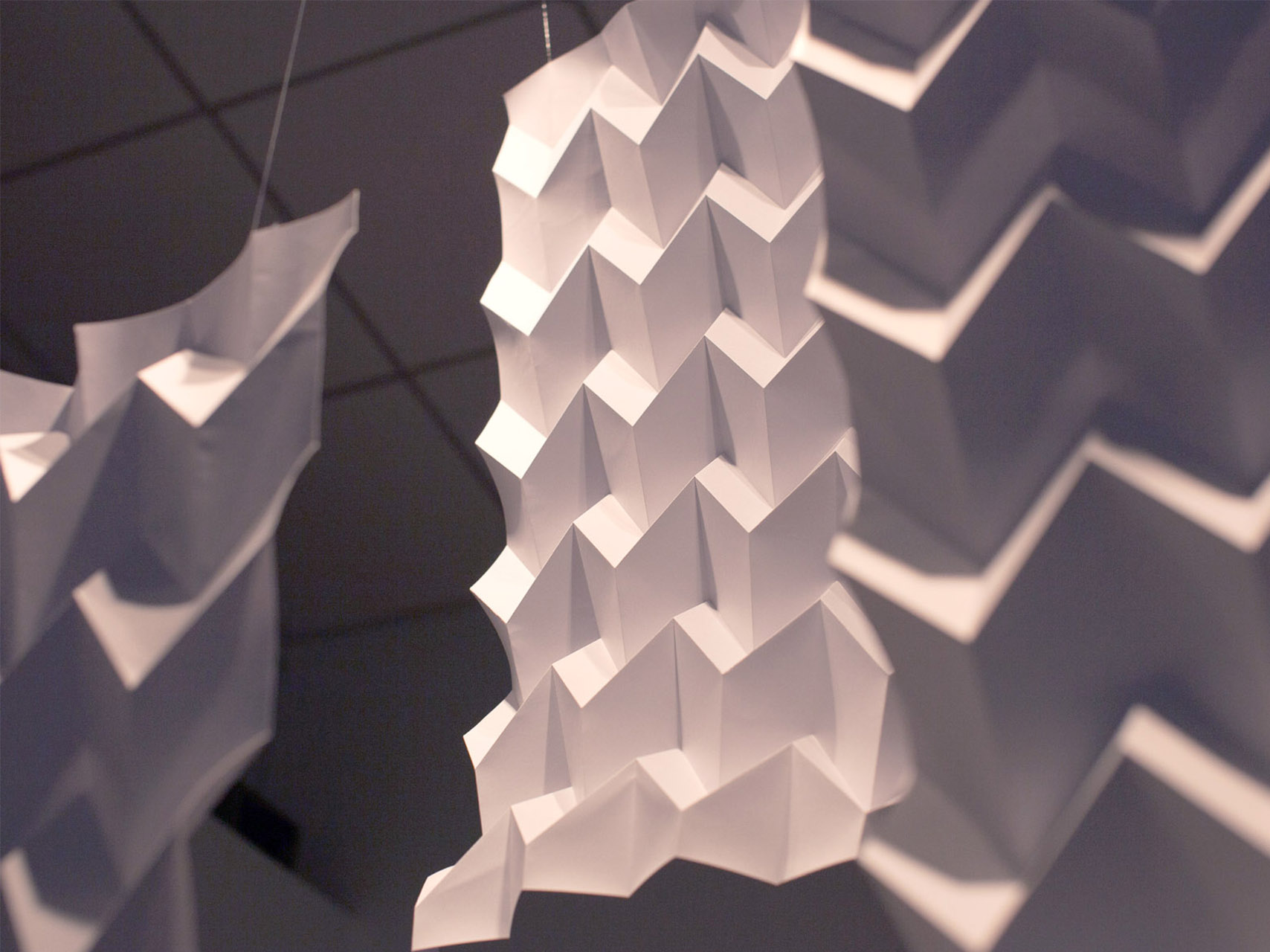

The material exploration includes a real-time speech analysis demo and a physical artifact that expresses "responsiveness." For the physical artifact, I designed an origami-like structure to illustrate the idea of responsive architecture that users can be immersed in.

Imagine a world where buildings are embedded with sensors that supply the IoT ecosystem with a full spectrum of data: air quality, traffic, weather, and so on. Because there are so many sensors, their working schedules must be optimized to maximize the energy efficiency of the IoT system. An AI therefore governs when each sensor activates, based on the level of user demand.

To decide whether a data request is taken into consideration, the AI analyzes the communication among residents, which serves as the trigger for their requests. If it detects positive communication, the AI activates the sensors that serve the data requests all of the interlocutors have in common.
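The activation rule in this speculation can be sketched in a few lines of Python. This is a hypothetical illustration of the fictional Neighborhood AI, not part of the built installation: the set of tones counted as "positive" and the names `POSITIVE_TONES`, `Resident` and `sensors_to_activate` are assumptions made for the example.

```python
from dataclasses import dataclass, field

# Hypothetical: which detected tones count as "positive communication".
POSITIVE_TONES = {"joy", "confident"}


@dataclass
class Resident:
    name: str
    # Data types this resident wants, e.g. {"air_quality", "traffic"}.
    data_requests: set = field(default_factory=set)


def sensors_to_activate(interlocutors, detected_tone):
    """Return the data types whose sensors the Neighborhood AI switches on.

    Only a positive conversation triggers activation, and only for data
    that every interlocutor has requested (the intersection of requests).
    """
    if detected_tone not in POSITIVE_TONES or not interlocutors:
        return set()
    common = set(interlocutors[0].data_requests)
    for person in interlocutors[1:]:
        common &= person.data_requests
    return common


if __name__ == "__main__":
    ana = Resident("Ana", {"air_quality", "traffic", "weather"})
    ben = Resident("Ben", {"traffic", "weather"})
    print(sorted(sensors_to_activate([ana, ben], "joy")))    # ['traffic', 'weather']
    print(sorted(sensors_to_activate([ana, ben], "anger")))  # []
```

Using the intersection rather than the union is what makes the neighborhoods self-sorting: a resident whose requests overlap with nobody else's never triggers a sensor, however pleasant the conversation.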

This process draws like-minded residents into the same area and gives each "data neighborhood" its distinct character. By the same logic, a user who starts receiving a type of data after entering a neighborhood must share a mindset with the locals.

Those who do not get along with the locals, however, will have a hard time living in some data neighborhoods, since the only way to receive data depends on what they have in common with other residents. They can either relocate to a neighborhood that shares their mindset or live a dataless life.

This design speculation plays out what happens when the Neighborhood AI uses its classification system to match up residents for the "greater good" of a more efficient, radically optimized community. It raises the following questions.

On the surface, the AI seems fine and dandy, even displaying how hard it is working through its dynamic, expressive form. But do interactions in public become performative because the walls are literally listening? And in private realms, where no AI "smooths" out the interactions, how does residents' behavior change? What is real and what is not? What is an act and what is not?

On a broader level, how does a radically sensitive city with an AI living as its infrastructure play out? What does radical responsiveness look like at the urban scale? How much effort do citizens need to put in if the computational city is already relentlessly attentive? What is the role of the citizen in operating these kinds of massively scaled AI systems?

Listening City went through another visual iteration and was showcased at Ars Electronica: Artificial Intelligence in Linz, Austria, alongside other Media Design Practices works.